Approximating Gradients for

Differentiable Quality Diversity in

Reinforcement Learning

GECCO 2022 — 11 July 2022

Bryon Tjanaka

University of Southern California

tjanaka@usc.edu

Matthew C. Fontaine

University of Southern California

mfontain@usc.edu

Julian Togelius

New York University

julian@togelius.com

Stefanos Nikolaidis

University of Southern California

nikolaid@usc.edu

Website

https://dqd-rl.github.io

"Normal"

Front

Back

Quality Diversity (QD)

Differentiable Quality Diversity

(DQD)

Quality Diversity for Reinforcement Learning (QD-RL)

Approximating Gradients

for DQD in RL

Experiments

Results

Front: 40%

Back: 50%

$$\text{QD score} = \sum_{i=1}^{M} f(\phi_i)$$
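As a minimal sketch of the QD score above, the snippet below sums the objective values of the elites stored in an archive. The `archive` dictionary and its cell keys are hypothetical stand-ins for illustration, and the sketch assumes the sum runs over occupied cells only.

```python
def qd_score(archive):
    """Sum the objective values f(phi_i) of all elites in the archive.

    `archive` is a hypothetical dict mapping a cell index to the objective
    value of the elite stored in that cell (empty cells are simply absent).
    """
    return sum(archive.values())


# Three occupied cells in a toy 2D archive.
archive = {(0, 1): 2.5, (3, 2): 1.0, (4, 4): 0.5}
score = qd_score(archive)
```

Because every occupied cell adds its objective value, the score grows both when new cells are filled (diversity) and when existing elites are replaced by better ones (quality).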

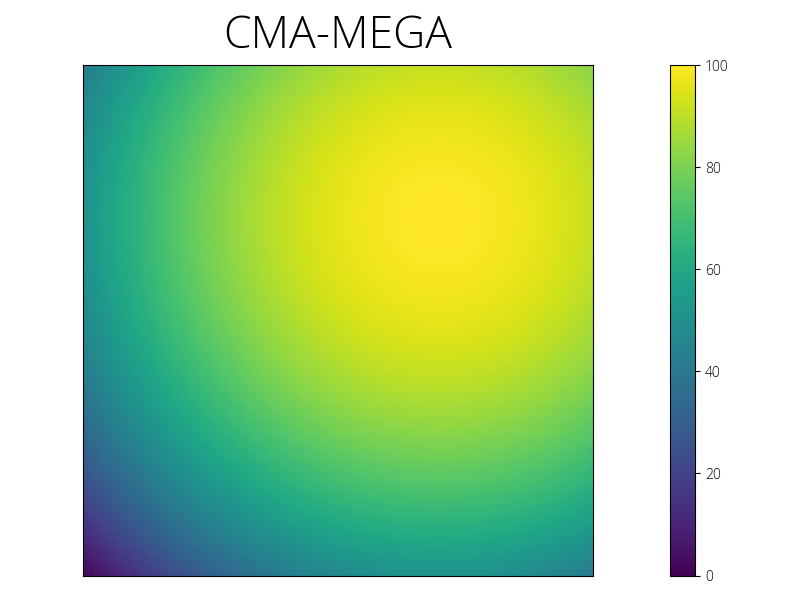

CMA-MEGA

Key Insight: Search by following objective and measure gradients.

Fontaine and Nikolaidis 2021, "Differentiable Quality Diversity." NeurIPS 2021 Oral.
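The key insight can be illustrated with a single branching step: a solution is moved along a weighted combination of the objective gradient and the measure gradients. This is only a sketch under stated assumptions. The function name `branch`, the fixed Gaussian over coefficients, and the toy gradients are illustrative; CMA-MEGA itself adapts the coefficient distribution with CMA-ES, which is not shown here.

```python
import numpy as np


def branch(phi, grad_f, grad_m, coeffs):
    """Move phi along a weighted sum of objective and measure gradients.

    grad_f: shape (n,), gradient of the objective.
    grad_m: shape (k, n), one gradient row per measure.
    coeffs: shape (k + 1,), coefficient for grad_f first, then each measure.
    """
    grads = np.vstack([grad_f, grad_m])  # shape (k + 1, n)
    norms = np.linalg.norm(grads, axis=1, keepdims=True)
    grads = grads / np.maximum(norms, 1e-8)  # normalize each gradient row
    return phi + coeffs @ grads


rng = np.random.default_rng(0)
phi = np.zeros(4)
grad_f = np.array([1.0, 0.0, 0.0, 0.0])
grad_m = np.array([[0.0, 2.0, 0.0, 0.0], [0.0, 0.0, 0.0, 3.0]])

# Assumption: coefficients drawn from a fixed standard Gaussian for illustration.
coeffs = rng.normal(size=(5, 3))
candidates = np.array([branch(phi, grad_f, grad_m, c) for c in coeffs])
```

Each sampled coefficient vector yields one candidate solution, so different coefficient signs push the candidate toward higher or lower values of each measure while also trading off the objective.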

Policy Gradient Assisted MAP-Elites

(PGA-MAP-Elites)

O. Nilsson and A. Cully 2021. "Policy Gradient Assisted MAP-Elites." GECCO 2021.

Inspired by

PGA-MAP-Elites!

Problem: Exact gradients are unavailable!

Solution: Approximate ∇f and ∇m.

| DQD | QD-RL |
|---|---|
| Exact Gradients | Approximate Gradients |
| CMA-MEGA | CMA-MEGA with |

Approximating ∇f

↑

Expected discounted return

Off-Policy Actor-Critic Method (TD3)

S. Fujimoto et al. 2018, "Addressing Function Approximation Error in Actor-Critic Methods." ICML 2018.

Evolution Strategy (OpenAI-ES)

Salimans et al. 2017, "Evolution Strategies as a Scalable Alternative to Reinforcement Learning." https://arxiv.org/abs/1703.03864

Approximating ∇m

↑

Black Box

| | CMA-MEGA (ES) | CMA-MEGA (TD3, ES) |
|---|---|---|
| ∇f | ES | TD3 |
| ∇m | ES | ES |

CMA-MEGA (ES) & CMA-MEGA (TD3, ES)

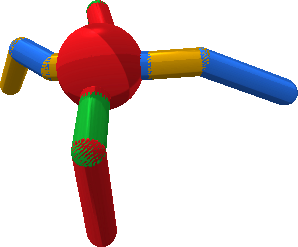

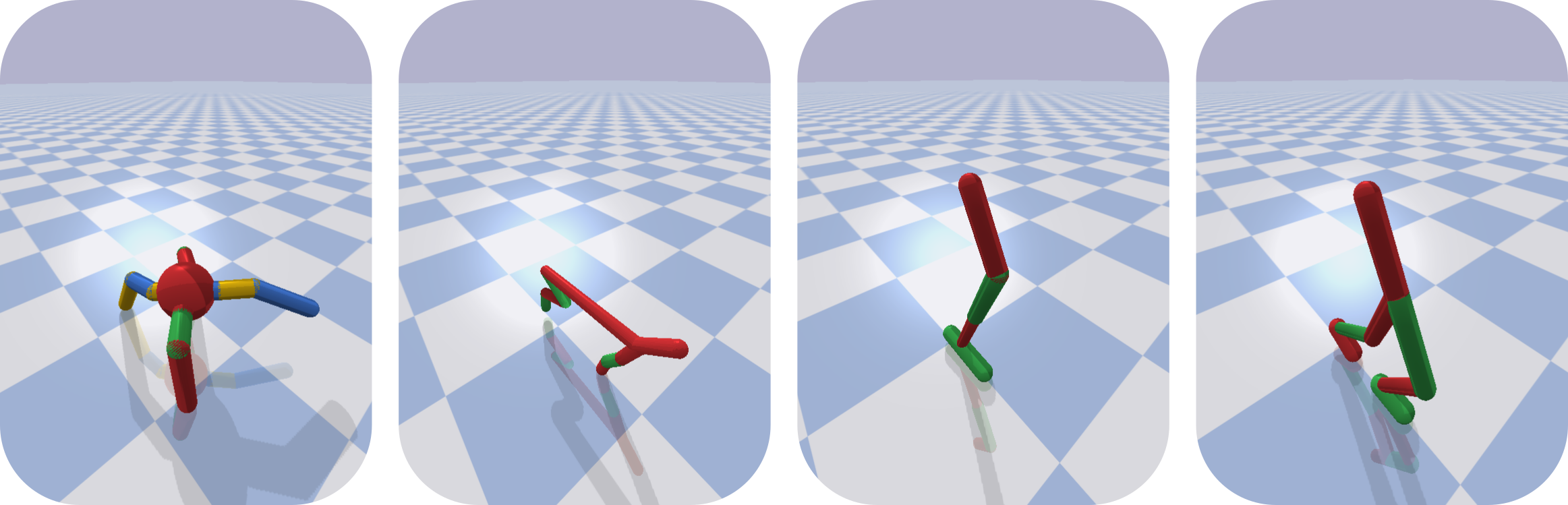

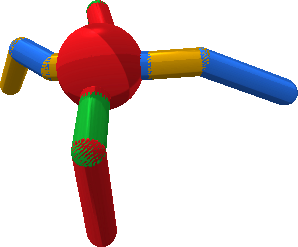

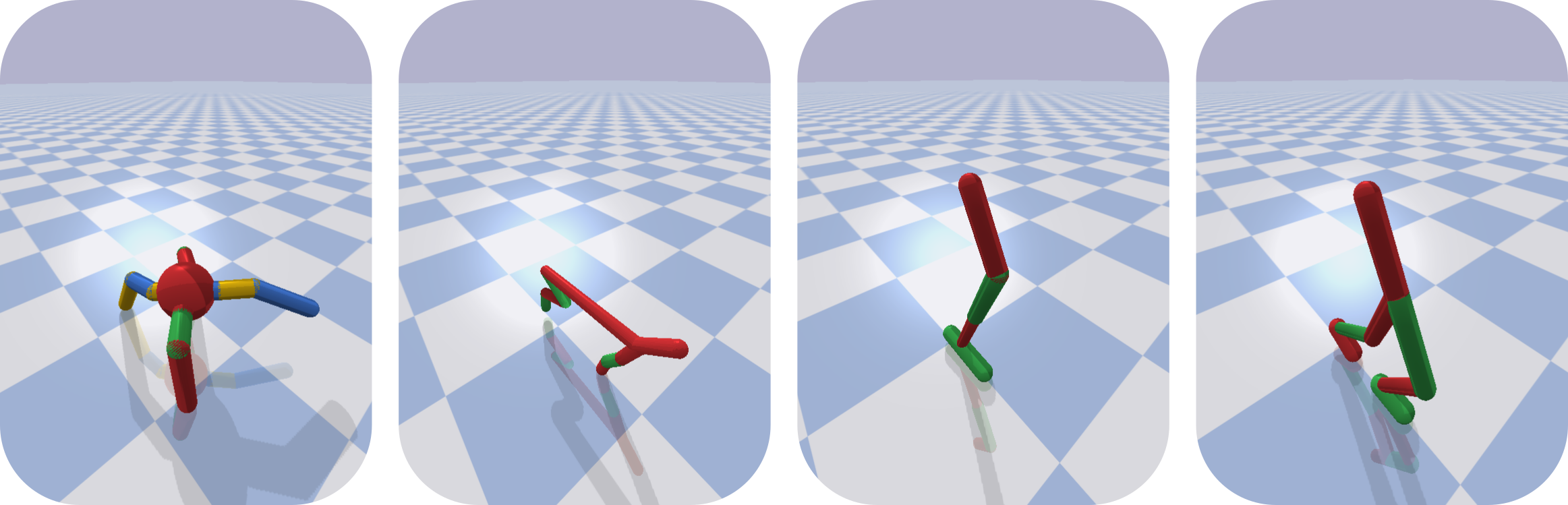

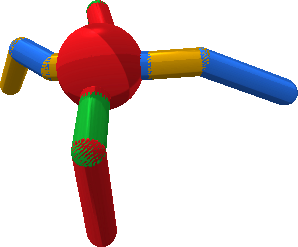

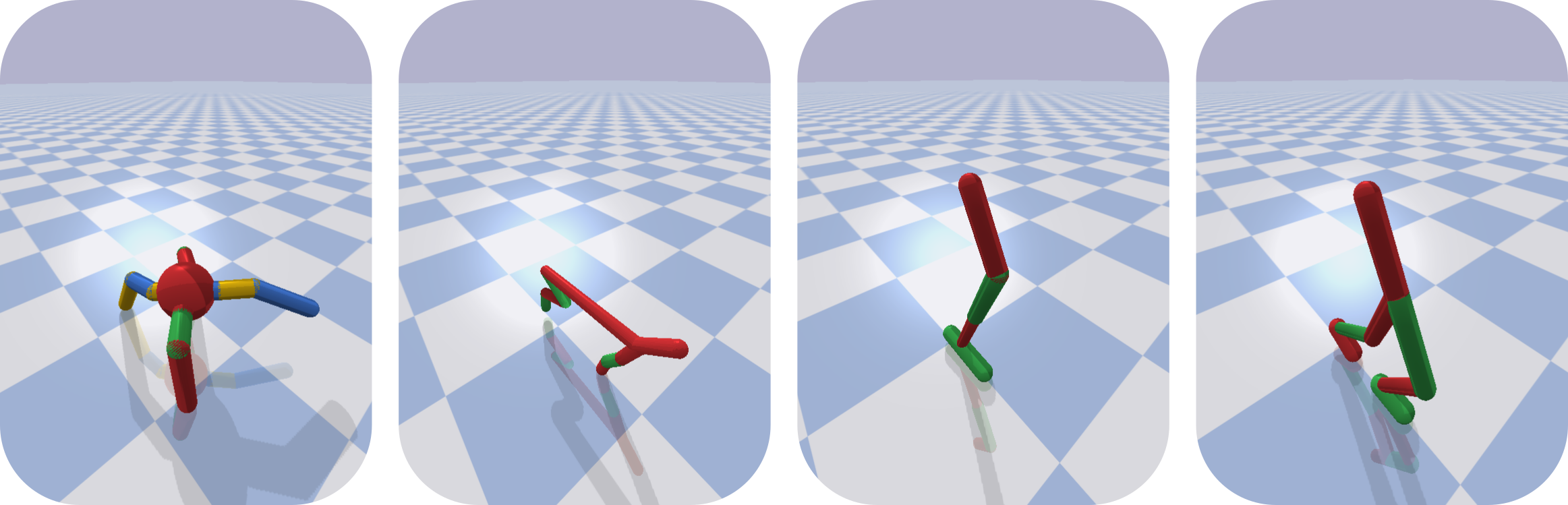

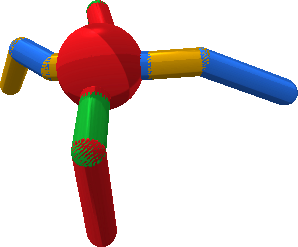

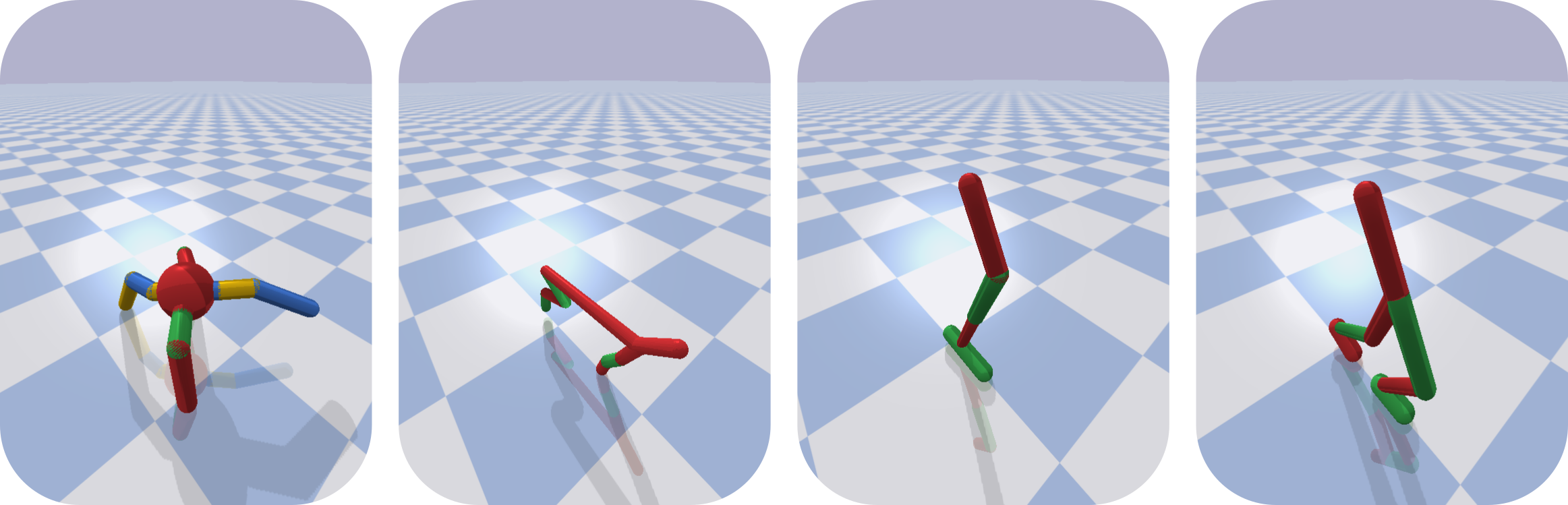

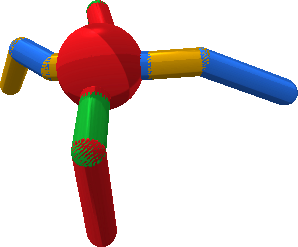

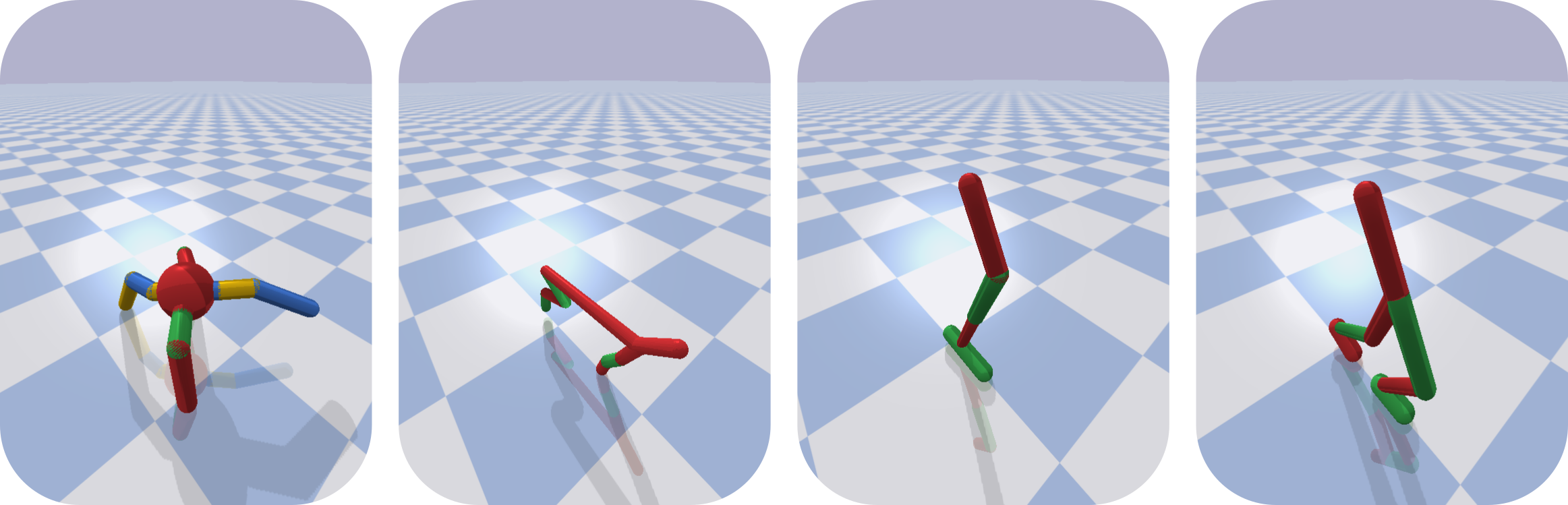

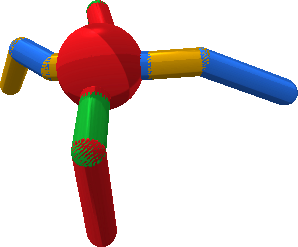

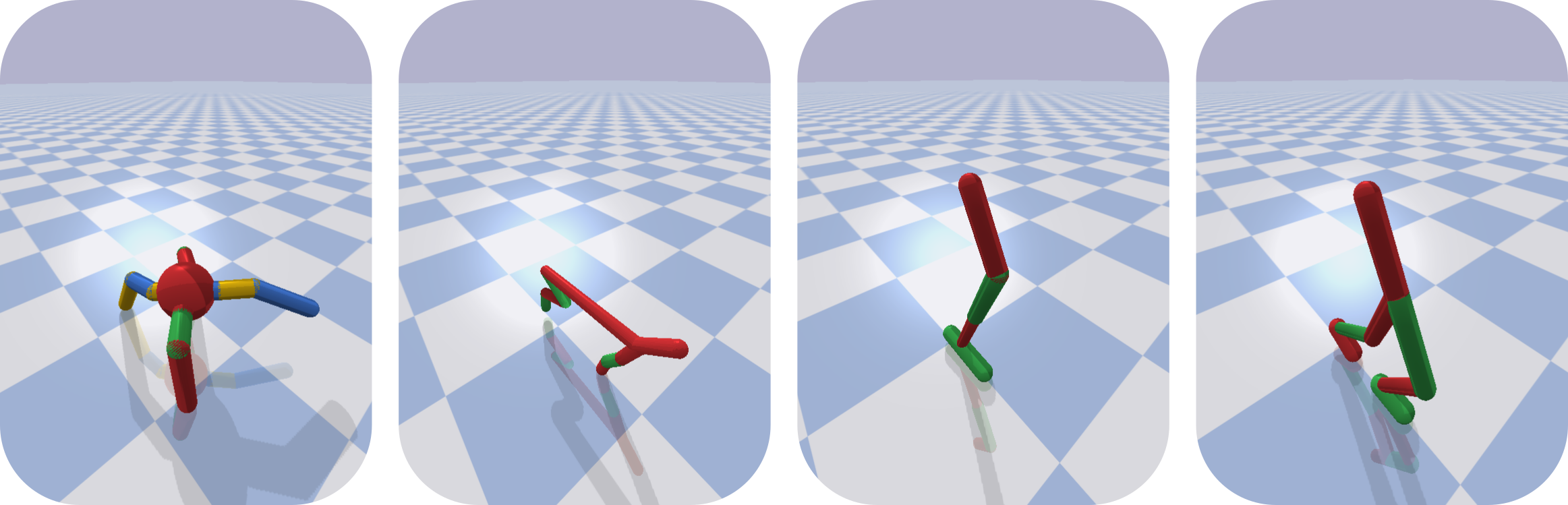

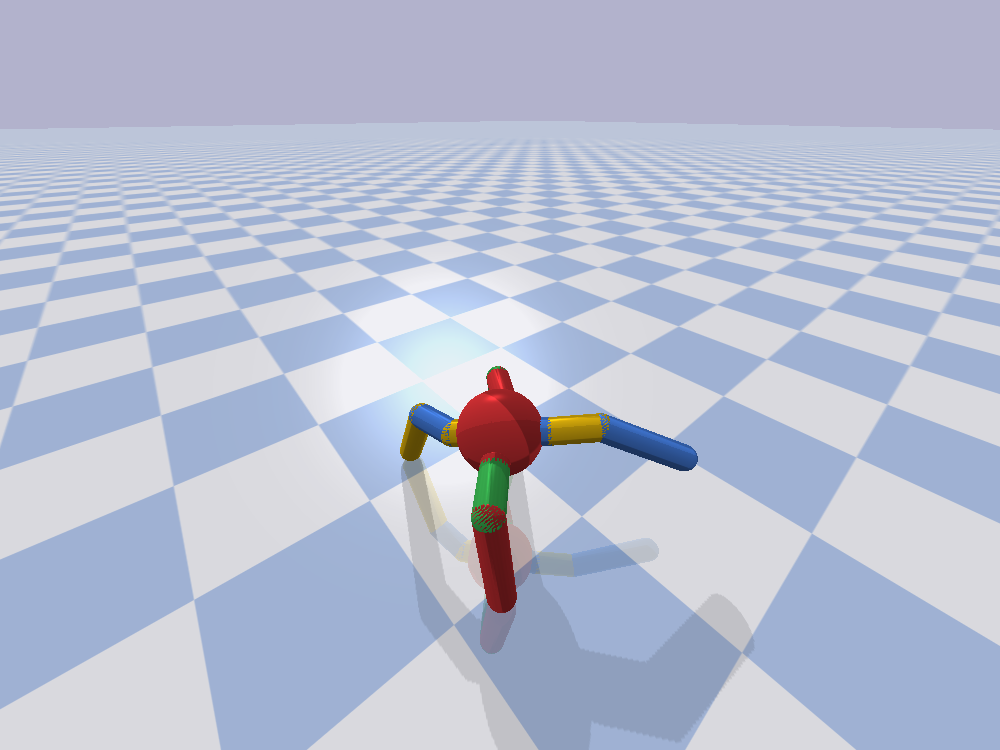

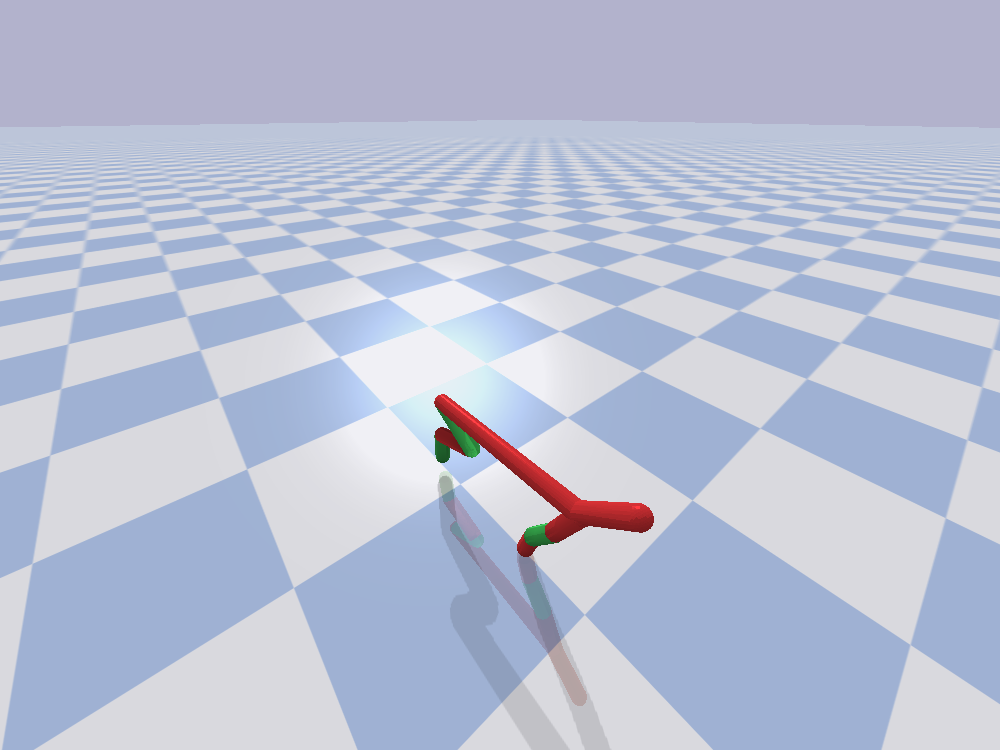

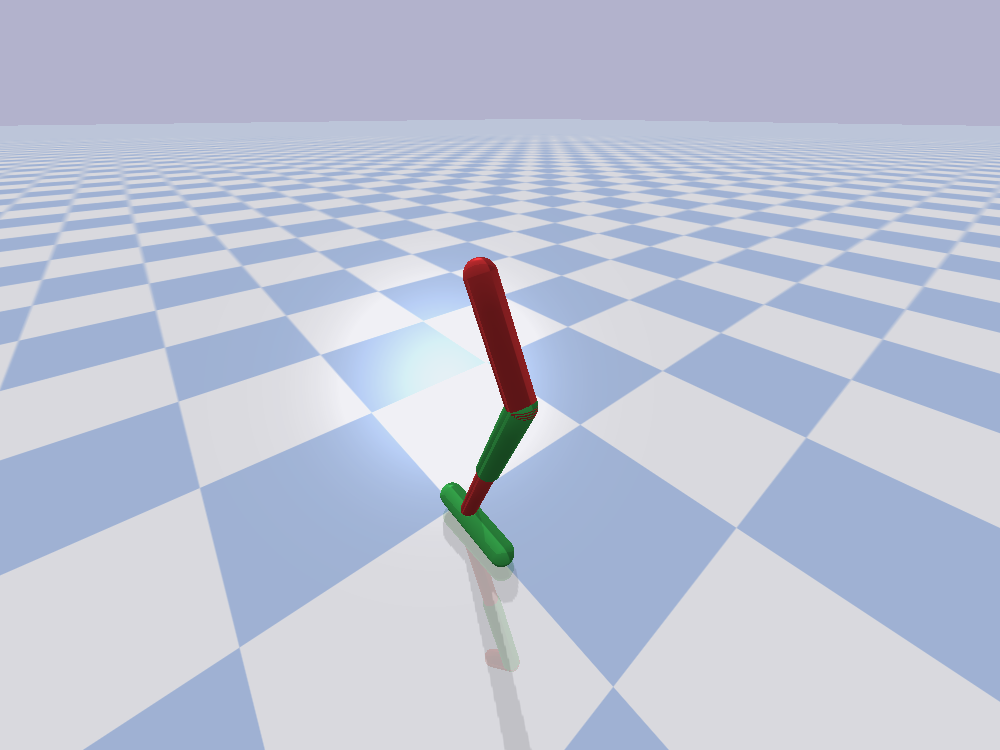

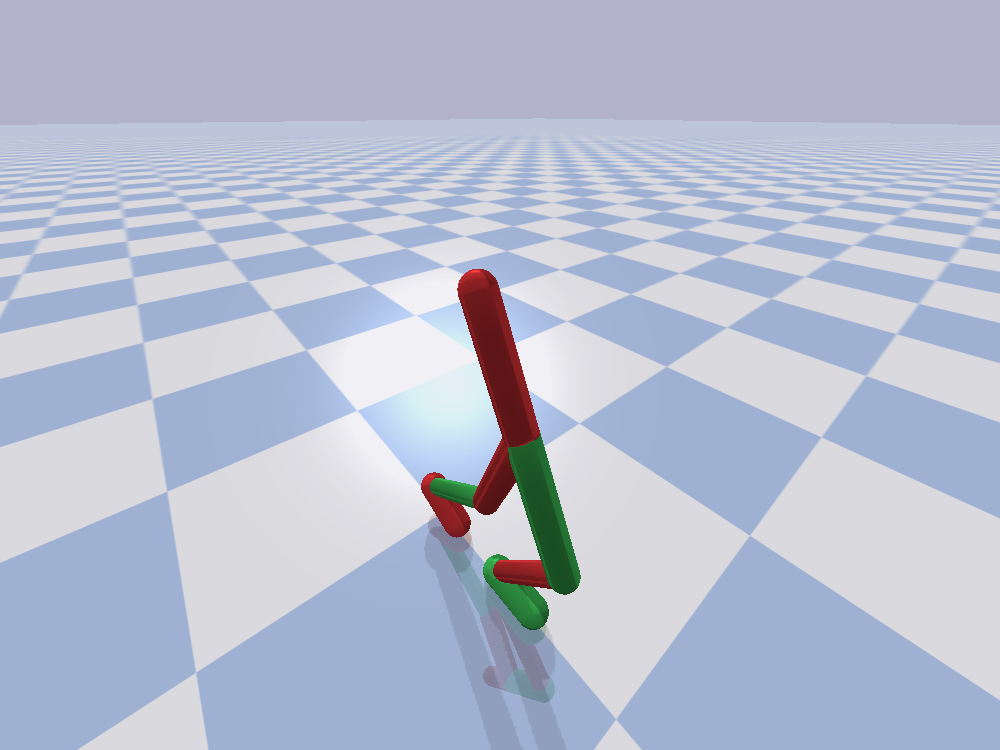

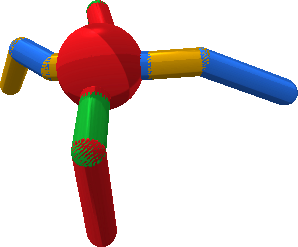

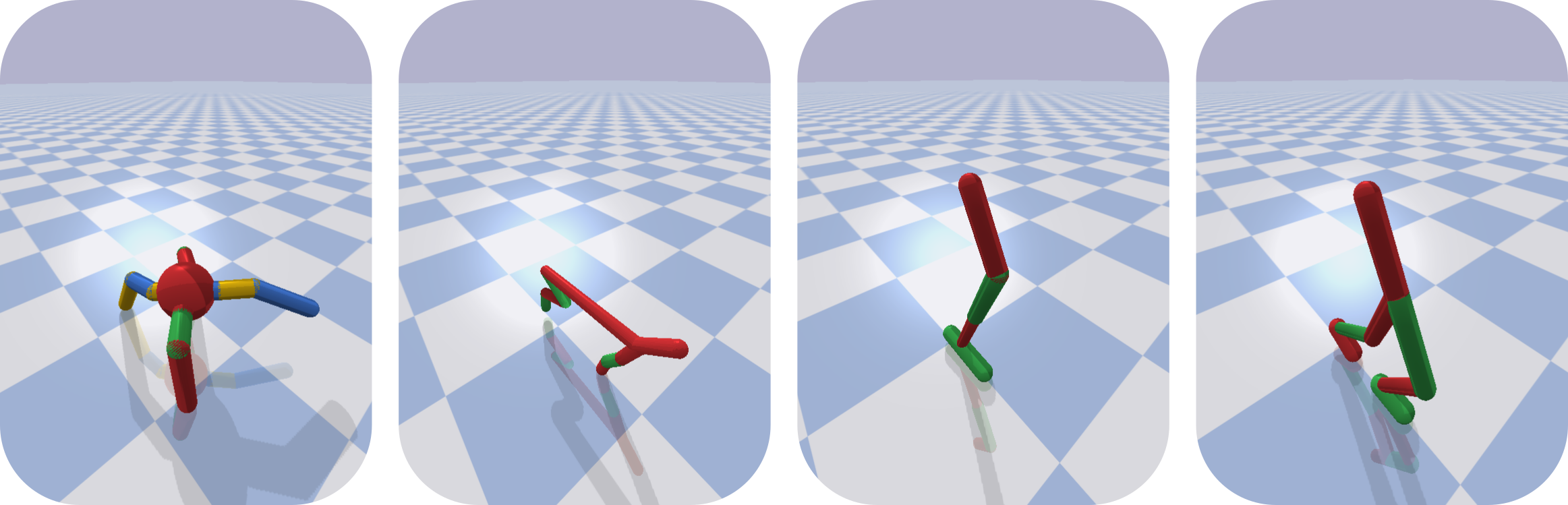

QD Ant

QD Half-Cheetah

QD Hopper

QD Walker

Independent Variables

- Algorithm: CMA-MEGA (ES), CMA-MEGA (TD3, ES), PGA-MAP-Elites, MAP-Elites, ME-ES
- Environment: QD Ant, QD Half-Cheetah, QD Hopper, QD Walker

Dependent Variable

- QD Score

| | CMA-MEGA (ES) | CMA-MEGA (TD3, ES) |
|---|---|---|
| PGA-MAP-Elites | Comparable on 2/4 | Comparable on 4/4 |
| MAP-Elites | Outperforms on 4/4 | Outperforms on 4/4 |
| ME-ES | Outperforms on 3/4 | Outperforms on 4/4 |

Inspired by

PGA-MAP-Elites!

OG-MAP-Elites

(DQD Benchmark Domain)

Easy objective, difficult measures

PGA-MAP-Elites

(QD Walker)

Difficult objective, easy measures

| | PGA-MAP-Elites | CMA-MEGA (ES), CMA-MEGA (TD3, ES) |
|---|---|---|
| Objective Gradient Steps | 5,000,000 | 5,000 |

Supplemental

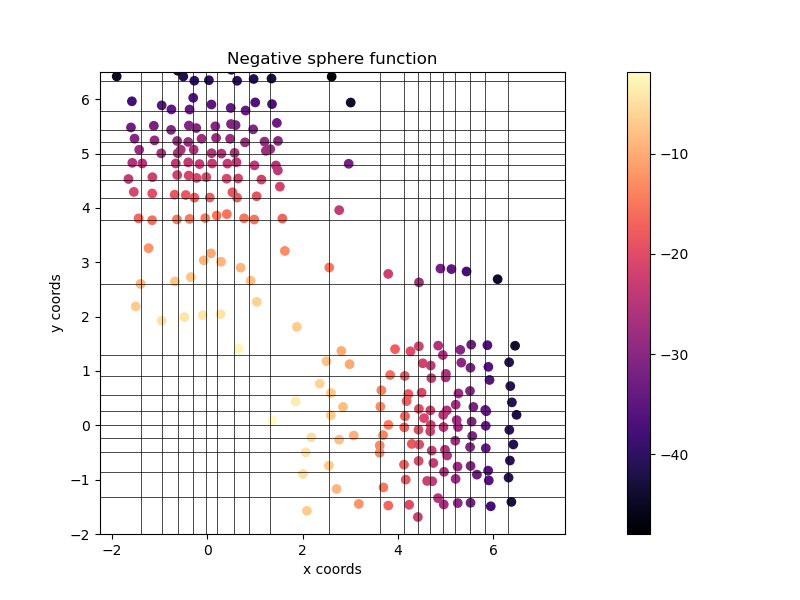

DQD Benchmark

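$$\text{sphere}(\phi) = \sum_{i=1}^{n} (\phi_i - 2.048)^2$$

$$\text{clip}(\phi_i) = \begin{cases} \phi_i & \text{if } -5.12 \le \phi_i \le 5.12 \\ 5.12 / \phi_i & \text{otherwise} \end{cases}$$

$$m(\phi) = \left( \sum_{i=1}^{\lfloor n/2 \rfloor} \text{clip}(\phi_i),\ \sum_{i=\lfloor n/2 \rfloor + 1}^{n} \text{clip}(\phi_i) \right)$$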
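The benchmark formulas above translate directly into a few lines of NumPy. This is a sketch that follows the slide's definitions as written; the function names are chosen here for illustration, and any sign flip or normalization applied to the objective in the actual benchmark is not shown.

```python
import numpy as np


def sphere(phi):
    """Sphere function shifted so its minimum is at phi_i = 2.048."""
    return float(np.sum((phi - 2.048) ** 2))


def clip(phi):
    """Identity inside [-5.12, 5.12]; 5.12 / phi_i outside that range."""
    # Replace zeros before dividing; zeros are in range, so the quotient
    # computed for them is never selected by np.where below.
    safe = np.where(phi == 0, 1.0, phi)
    return np.where(np.abs(phi) <= 5.12, phi, 5.12 / safe)


def measures(phi):
    """Sum of clipped values over the first and second halves of phi."""
    half = len(phi) // 2
    clipped = clip(phi)
    return np.array([clipped[:half].sum(), clipped[half:].sum()])


phi = np.array([1.0, 2.0, 10.0, -10.0])
objective = sphere(phi)
m = measures(phi)
```

Since each clipped value shrinks once a coordinate leaves the range, each measure is bounded by 5.12 times the number of coordinates in its half, and the boundary values can only be reached when every coordinate in that half sits exactly at ±5.12.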

Fontaine et al. 2020, "Covariance Matrix Adaptation for the Rapid Illumination of Behavior Space." GECCO 2020.

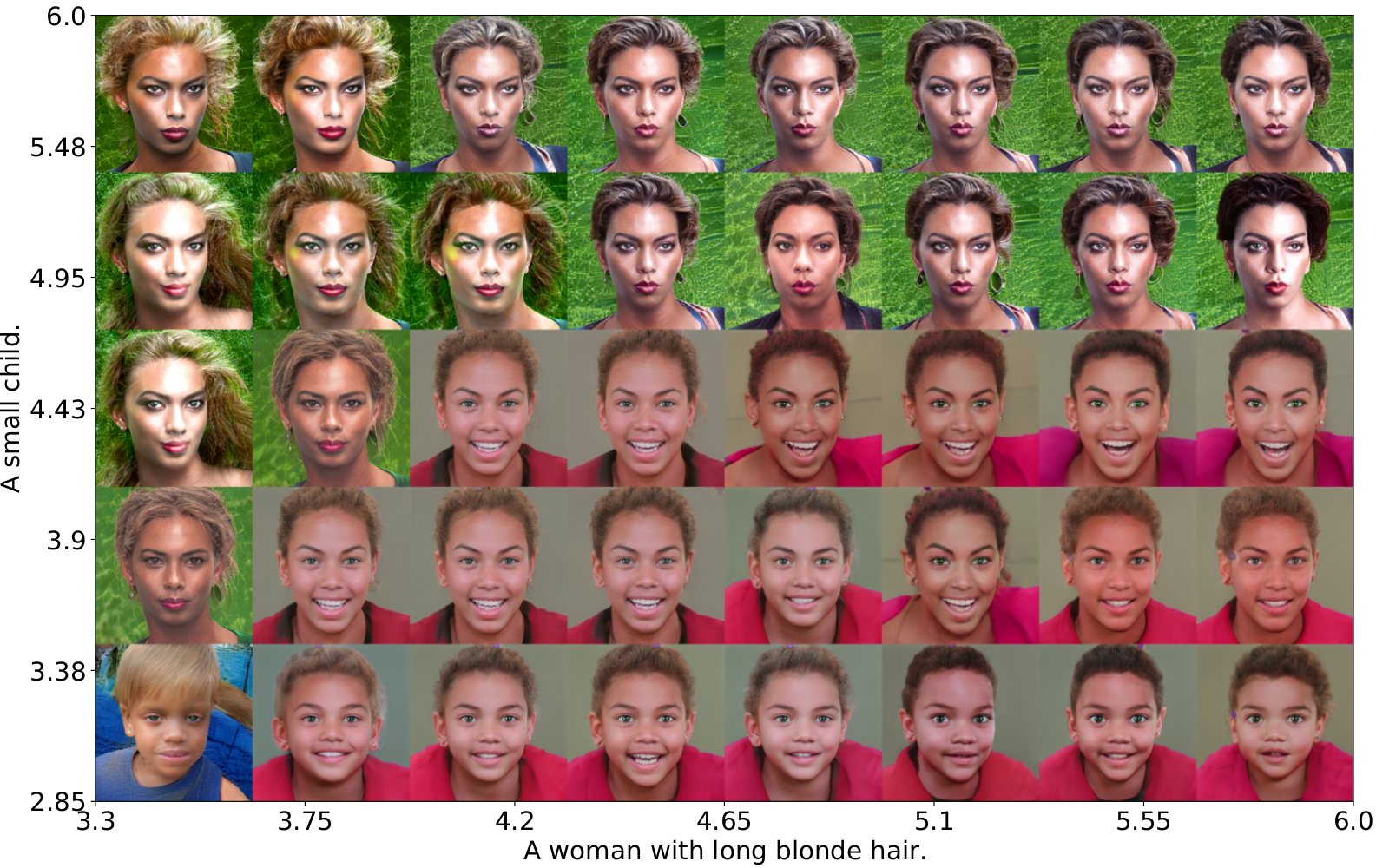

DQD StyleGAN+CLIP

Fontaine and Nikolaidis 2021, "Differentiable Quality Diversity." NeurIPS 2021 Oral.

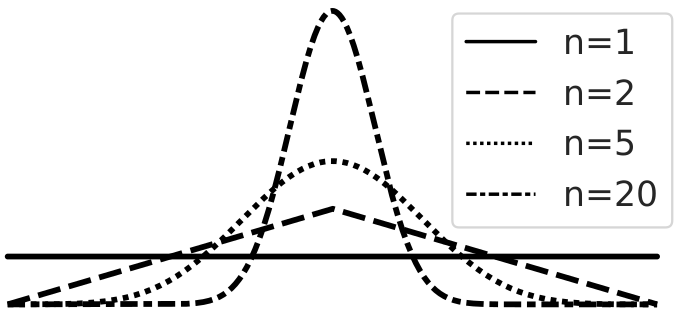

OpenAI-ES

$$\nabla f(\phi) \approx \frac{1}{\lambda_{es}\sigma} \sum_{i=1}^{\lambda_{es}} f(\phi + \sigma\epsilon_i)\,\epsilon_i$$
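A minimal sketch of the estimator above, assuming `f` is any black-box scalar function of the parameters. The name `es_gradient`, the default hyperparameters, and the toy linear objective are illustrative choices rather than values from the talk.

```python
import numpy as np


def es_gradient(f, phi, sigma=0.02, num_samples=100, rng=None):
    """Estimate the gradient of f at phi from Gaussian perturbations.

    Implements (1 / (lambda_es * sigma)) * sum_i f(phi + sigma * eps_i) * eps_i,
    where num_samples plays the role of lambda_es.
    """
    rng = np.random.default_rng() if rng is None else rng
    eps = rng.standard_normal((num_samples, phi.size))
    values = np.array([f(phi + sigma * e) for e in eps])
    return (values @ eps) / (num_samples * sigma)


# Toy black-box objective with known gradient `a` (hypothetical example).
a = np.array([1.0, -2.0, 0.5])
estimate = es_gradient(
    lambda x: float(a @ x),
    np.zeros(3),
    sigma=0.1,
    num_samples=20000,
    rng=np.random.default_rng(0),
)
```

The plain estimator is noisy, especially when the objective values are large relative to `sigma`. Salimans et al. reduce the variance with mirrored sampling and rank-based fitness shaping, which this sketch omits for brevity. The same routine could be applied to each measure separately to approximate ∇m.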

TD3 Overview

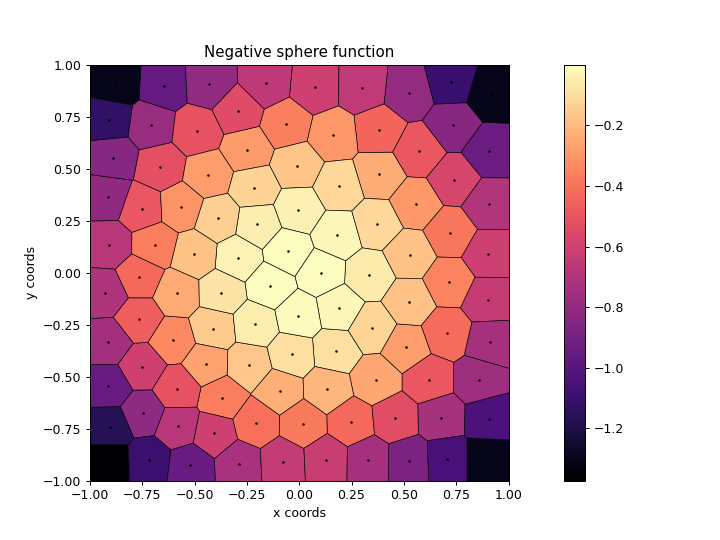

CVT Archive

Vassiliades et al. 2018. "Using Centroidal Voronoi Tessellations to Scale Up the Multidimensional Archive of Phenotypic Elites Algorithm." IEEE Transactions on Evolutionary Computation 2018.
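To make the CVT archive concrete, the sketch below approximates a centroidal Voronoi tessellation by running a few k-means iterations on uniform samples of the measure space, then maps a measure vector to its nearest centroid. The function names, sample counts, and iteration count are assumptions for illustration, not the settings used in the experiments.

```python
import numpy as np


def cvt_centroids(num_cells, lower, upper, num_samples=5000, iters=20, seed=0):
    """Approximate CVT centroids with k-means on uniform samples."""
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    samples = rng.uniform(lower, upper, size=(num_samples, lower.size))
    # Start from distinct random samples as initial centroids.
    centroids = samples[rng.choice(num_samples, num_cells, replace=False)]
    for _ in range(iters):
        dists = np.linalg.norm(samples[:, None, :] - centroids[None, :, :], axis=2)
        nearest = dists.argmin(axis=1)
        for c in range(num_cells):
            members = samples[nearest == c]
            if len(members) > 0:
                centroids[c] = members.mean(axis=0)
    return centroids


def cell_index(centroids, measures):
    """Return the index of the cell whose centroid is closest to `measures`."""
    dists = np.linalg.norm(centroids - np.asarray(measures, float), axis=1)
    return int(dists.argmin())


centroids = cvt_centroids(8, lower=[0.0, 0.0], upper=[1.0, 1.0])
idx = cell_index(centroids, [0.25, 0.75])
```

Because the number of cells is fixed in advance rather than growing with a grid resolution per dimension, this layout keeps the archive size manageable as the measure space gains dimensions.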

Sliding Boundaries Archive

Fontaine et al. 2019. "Mapping Hearthstone Deck Spaces through MAP-Elites with Sliding Boundaries." GECCO 2019.