Running Distributed Quality-Diversity Algorithms on HPC

Bryon Tjanaka, ICAROS Lab | 16 February 2021

Article

Slides

Overview

- Distributed QD Algorithms

- Hardware

- Distributed Computations with Dask

- Additional Considerations

- Further Resources

- Demo

Distributed QD Algorithms

Regular QD

archive = init_archive()
for n in range(generations):
    solutions = create_new_solutions(archive)
    evaluate(solutions)
    insert_into_archive(solutions)


Distributed QD

archive = init_archive()
for n in range(generations):
    solutions = create_new_solutions(archive)
    distribute_evaluations(solutions)
    retrieve_evaluation_results()
    insert_into_archive(solutions)


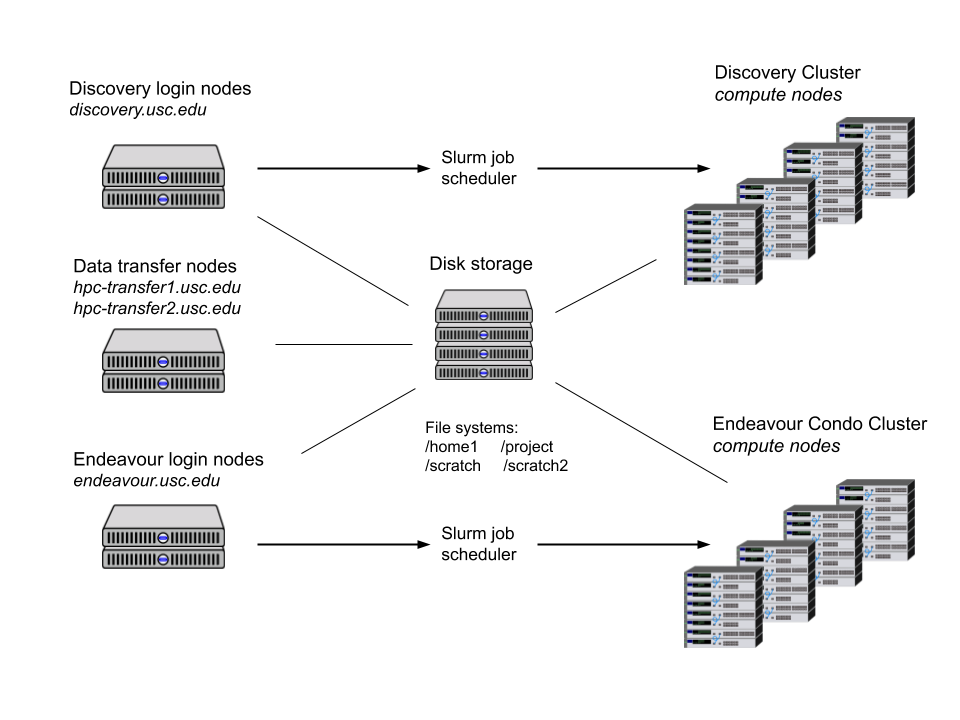
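The distributed loop above can be sketched in runnable form. This is a minimal illustration, not the talk's implementation: it uses the standard library's `ThreadPoolExecutor` as a stand-in for a cluster, and the toy `evaluate` function, the 10-cell archive, and all parameter names are assumptions made for the example.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def evaluate(solution):
    """Toy evaluation (assumed): returns (objective, archive cell) for a 2-D point."""
    x, y = solution
    objective = -(x * x + y * y)  # higher is better
    cell = min(9, max(0, int((x + 1) * 5)))  # clamp to one of 10 cells
    return objective, cell


def create_new_solutions(archive, rng, batch_size):
    """Sample uniformly while the archive is empty, otherwise mutate random elites."""
    if not archive:
        return [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(batch_size)]
    elites = [solution for _, solution in archive.values()]
    new_solutions = []
    for _ in range(batch_size):
        x, y = rng.choice(elites)
        new_solutions.append((x + rng.gauss(0, 0.1), y + rng.gauss(0, 0.1)))
    return new_solutions


def insert_into_archive(archive, solutions, results):
    """Keep only the best solution seen so far in each cell."""
    for solution, (objective, cell) in zip(solutions, results):
        if cell not in archive or objective > archive[cell][0]:
            archive[cell] = (objective, solution)


def run(generations=20, batch_size=8, workers=4, seed=0):
    rng = random.Random(seed)
    archive = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for _ in range(generations):
            solutions = create_new_solutions(archive, rng, batch_size)
            # Distribute evaluations: one task per solution.
            futures = [pool.submit(evaluate, s) for s in solutions]
            # Retrieve evaluation results in submission order.
            results = [f.result() for f in futures]
            insert_into_archive(archive, solutions, results)
    return archive
```

Only the evaluation step runs in parallel; creating solutions and inserting into the archive stay in the main process, which matches the structure of the pseudocode.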

Hardware

Single-Machine

HPC

ssh USCNETID@discovery.usc.edu

1 node

20+ cores

100+ GB RAM

sbatch job.slurm

job.slurm

Slurm config

(Bash) commands
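To make the two parts of `job.slurm` concrete, here is a small sketch that renders such a file from Python: `#SBATCH` directives first, then the Bash commands. The default resource values echo the per-node figures on the slide; the function name, the job name, and the `python experiment.py` command are hypothetical.

```python
def render_slurm_job(job_name, commands, nodes=1, cpus_per_task=20,
                     mem_gb=100, time_limit="01:00:00"):
    """Return the text of a Slurm batch script: config block, then commands."""
    lines = [
        "#!/bin/bash",
        # Slurm config: resource requests read by sbatch.
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --cpus-per-task={cpus_per_task}",
        f"#SBATCH --mem={mem_gb}G",
        f"#SBATCH --time={time_limit}",
        "",
    ]
    # (Bash) commands: executed on the allocated node once the job starts.
    lines.extend(commands)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical job name and command, for illustration only.
    print(render_slurm_job("qd-experiment", ["python experiment.py"]))
```

Writing the returned text to `job.slurm` and submitting it with `sbatch job.slurm` would follow the workflow on the slide.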

Distributing Computation with Dask

- Python Library

- Workers + Scheduler

- TCP Connections

Running an Experiment on Dask

- Start scheduler job

- Start worker jobs

- Connect workers to scheduler

- Connect experiment to scheduler

- Repeatedly request evaluations
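The last two steps (connecting the experiment to the scheduler and repeatedly requesting evaluations) might look like the sketch below. It assumes a Dask scheduler and workers are already running and reachable over TCP; the host name, the toy `evaluate` function, and the helper names are hypothetical. Port 8786 is Dask's default scheduler port.

```python
def scheduler_address(host, port=8786):
    """Build the TCP address that workers and the experiment connect to."""
    return f"tcp://{host}:{port}"


def evaluate(solution):
    """Toy evaluation (assumed): sum of squares of the solution's components."""
    return sum(x * x for x in solution)


def run_on_dask(address, batches):
    """Connect to a running scheduler and evaluate each batch on the workers."""
    # Imported here so this module can be loaded without Dask installed.
    from dask.distributed import Client

    client = Client(address)
    try:
        all_results = []
        for solutions in batches:
            futures = client.map(evaluate, solutions)  # request evaluations
            all_results.append(client.gather(futures))  # block until finished
        return all_results
    finally:
        client.close()
```

A call such as `run_on_dask(scheduler_address("node01"), batches)` would then drive the experiment, where `node01` stands in for whichever node hosts the scheduler job.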

Additional Considerations

Integrating Dask into an Experiment

Environments / Containers

Configuration

Cluster

Algorithm
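One way to keep cluster settings and algorithm settings separate is a pair of small dataclasses. This is only a sketch: every field name and default below is hypothetical and would be replaced by whatever the experiment actually needs.

```python
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class ClusterConfig:
    """Settings that describe where the computation runs (all hypothetical)."""
    scheduler_host: str = "localhost"
    scheduler_port: int = 8786
    num_workers: int = 4
    cores_per_worker: int = 20


@dataclass(frozen=True)
class AlgorithmConfig:
    """Settings that describe what the QD algorithm does (all hypothetical)."""
    generations: int = 100
    batch_size: int = 32
    seed: int = 0


@dataclass(frozen=True)
class ExperimentConfig:
    cluster: ClusterConfig = field(default_factory=ClusterConfig)
    algorithm: AlgorithmConfig = field(default_factory=AlgorithmConfig)


if __name__ == "__main__":
    config = ExperimentConfig(algorithm=AlgorithmConfig(generations=5))
    # asdict() yields a nested dict that is easy to log or save with results.
    print(asdict(config))
```

Keeping the two groups apart means the same algorithm settings can be rerun on a laptop or on the cluster by swapping only the cluster half.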

Running Robust Experiments

Logging and Monitoring

def evaluation():
    logging.info("...")
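A fuller version of the logging idea, using only Python's standard `logging` module, could look like this. The log format, the logger name, and the toy objective are assumptions for illustration.

```python
import logging
import time

logger = logging.getLogger("evaluation")


def configure_logging(log_file=None):
    """Send INFO-level records to stderr and, optionally, to a file."""
    handlers = [logging.StreamHandler()]
    if log_file is not None:
        handlers.append(logging.FileHandler(log_file))
    logging.basicConfig(
        level=logging.INFO,
        format="%(asctime)s %(levelname)s %(name)s: %(message)s",
        handlers=handlers,
        force=True,  # replace any earlier configuration (Python 3.8+)
    )


def evaluation(solution):
    """Toy evaluation (assumed) that logs when it starts and finishes."""
    start = time.perf_counter()
    logger.info("Evaluating solution %s", solution)
    objective = -sum(x * x for x in solution)
    elapsed = time.perf_counter() - start
    logger.info("Finished in %.3fs with objective %.3f", elapsed, objective)
    return objective
```

Timestamped start and finish messages make it possible to tell from the log alone which evaluation was running when a job stalled or crashed.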

Reloading
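The save-then-rename pattern for reload data can be sketched as follows. The point of writing to a temporary file first is that a job killed mid-write leaves the previous reload file intact. The function names and the `.tmp` suffix are assumptions; `os.replace` performs the rename and overwrites an existing target.

```python
import os
import pickle


def save_reload_data(reload_data, path="reload.pkl"):
    """Write to a temporary file, then rename it over the real reload file."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "wb") as f:
        pickle.dump(reload_data, f)
        f.flush()
        os.fsync(f.fileno())  # make sure the bytes reach the disk first
    # The rename is atomic on POSIX when both paths are on the same filesystem.
    os.replace(tmp_path, path)


def load_reload_data(path="reload.pkl"):
    """Return the saved data, or None when no reload file exists yet."""
    if not os.path.exists(path):
        return None
    with open(path, "rb") as f:
        return pickle.load(f)
```

At startup an experiment would call `load_reload_data()` and resume from the returned state when it is not `None`.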

save(reload_data, "tmp.pkl")

rename("tmp.pkl", "reload.pkl")

Further Resources

Demo
